Copyright © 2004 Eric S. Raymond

This book and its on-line version are distributed under the terms of the Creative Commons Attribution-NoDerivs 1.0 license, with the additional proviso that the right to publish it on paper for sale or other for-profit use is reserved to Pearson Education, Inc. A reference copy of this license may be found at http://creativecommons.org/licenses/by-nd/1.0/legalcode.

| Revision History | | |
|---|---|---|
| Revision 0.1 | April 18 2004 | esr |
| Start of book | | |

Table of Contents

- An Invitation to Usability

- 1. Premises: The Rules of Interface Design

- Usability Metrics

- Usability and the Power Curve

- The Rules of Usability

- Rule of Bliss: Allow your users the luxury of ignorance.

- Rule of Distractions: Allow your users the luxury of inattention.

- Rule of Flow: Allow your users the luxury of attention.

- Rule of Documentation: Documentation is an admission of failure.

- Rule of Least Surprise: In interface design, always do the least surprising thing.

- Rule of Transparency: Every bit of program state that the user has to reason about should be manifest in the interface.

- Rule of Modelessness: The interface's response to user actions should be consistent and never depend on hidden state.

- Rule of Seven: Users can hold at most 7±2 things at once in working storage.

- Rule of Reversibility: Every operation without an undo is a horror story waiting to happen.

- Rule of Confirmation: Every confirmation prompt should be a surprise.

- Rule of Failure: All failures should be lessons in how not to fail.

- Rule of Silence: When a program has nothing surprising to say, it should say nothing.

- Rule of Automation: Never ask the user for any information that you can autodetect, copy, or deduce.

- Rule of Defaults: Choose safe defaults, apply them unobtrusively, and let them be overridden if necessary.

- Rule of Respect: Never mistake keeping things simple for dumbing them down, or vice-versa.

- Rule of Predictability: Predictability is more important than prettiness.

- Rule of Reality: The interface isn't finished till the end-user testing is done.

- Comparison with the Nielsen-Molich Evaluation Method

- Identifying with the User Experience

- 2. History: A Brief History of User Interfaces

- 3. Programming: GUI Construction in the Unix Environment

- 4. Wetware: The Human Side of Interfaces

- 5. Examples: The Good, the Bad, and the Ugly

- 6. Reality: Debugging and Testing User Interface Designs

- A. Design Rule Reference

- B. Bibliography

List of Figures

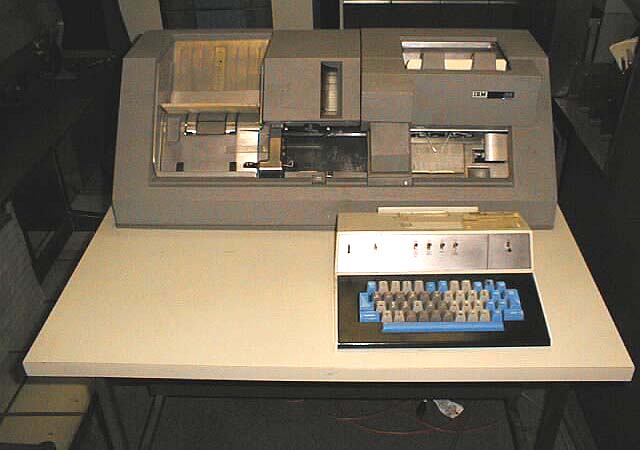

- 2.1. IBM 029 card punch.

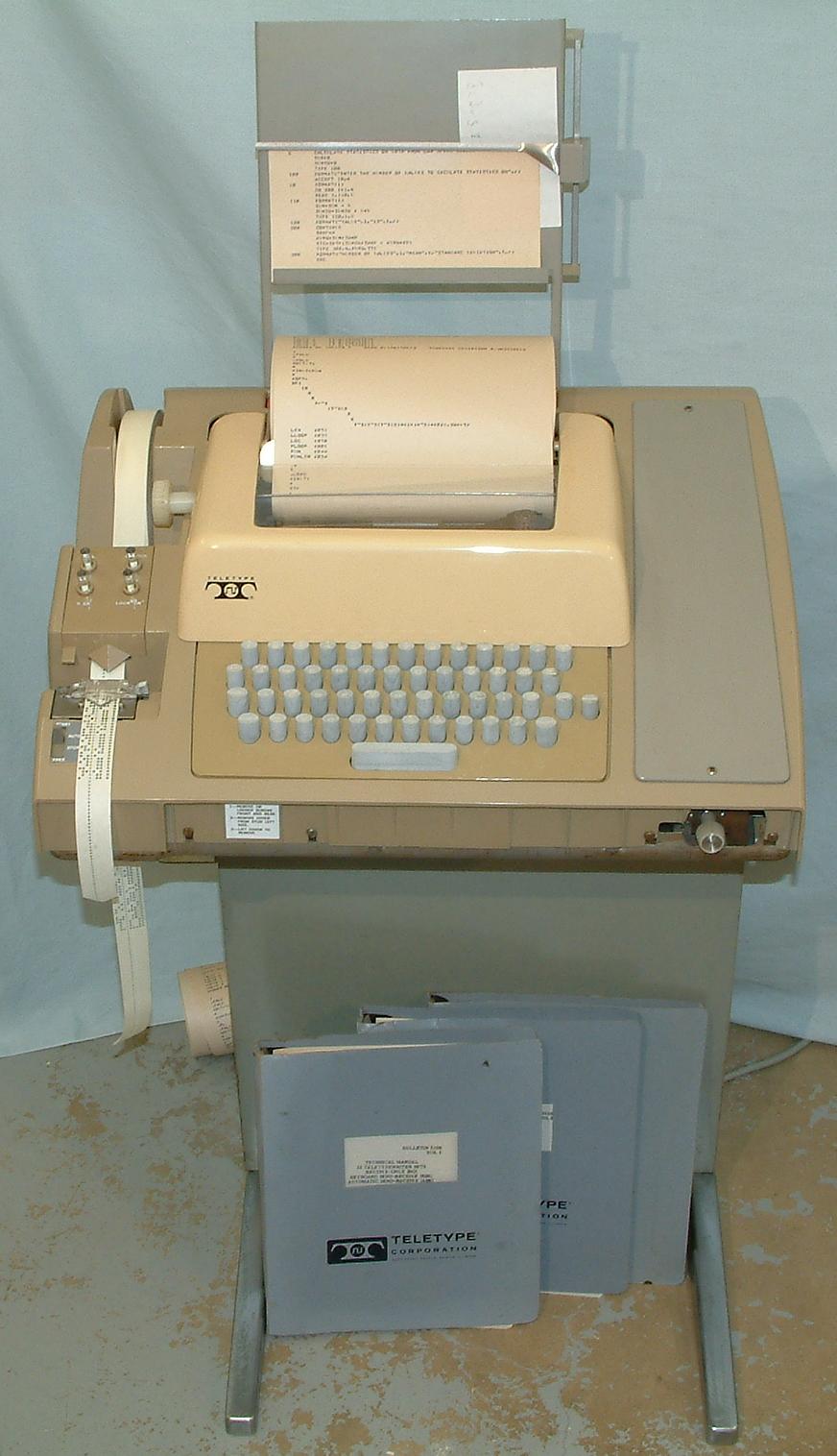

- 2.2. ASR-33 Teletype.

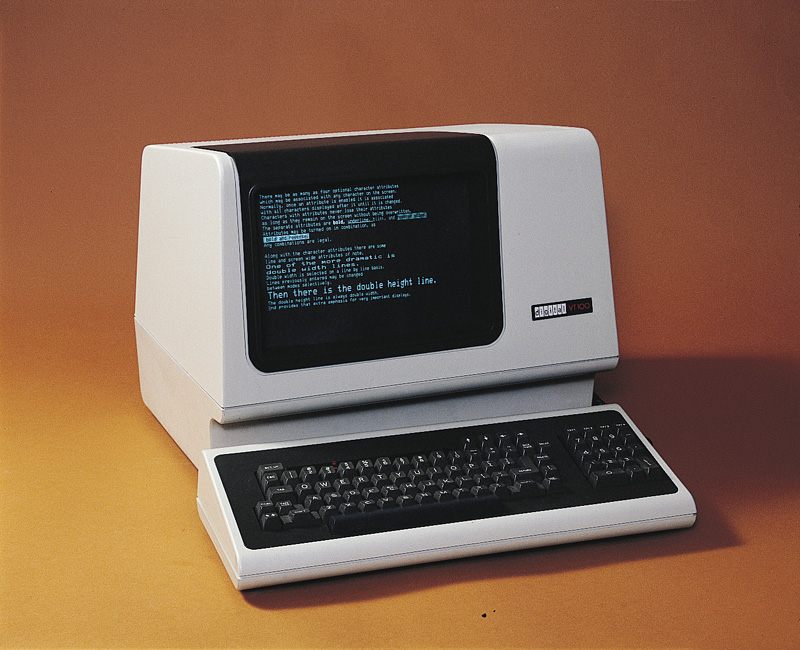

- 2.3. VT100 terminal.

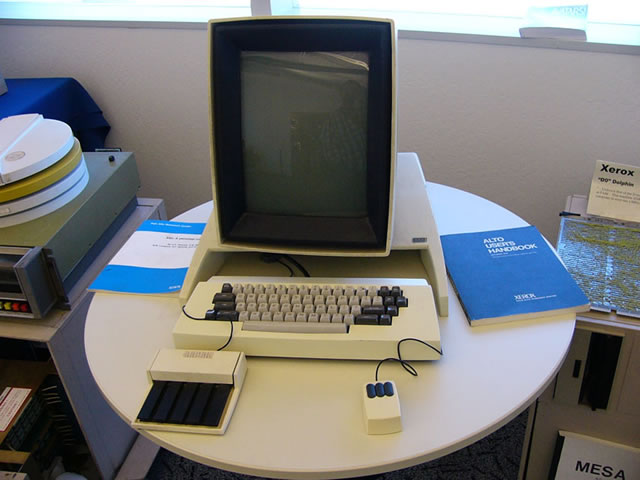

- 2.4. The Xerox Alto.

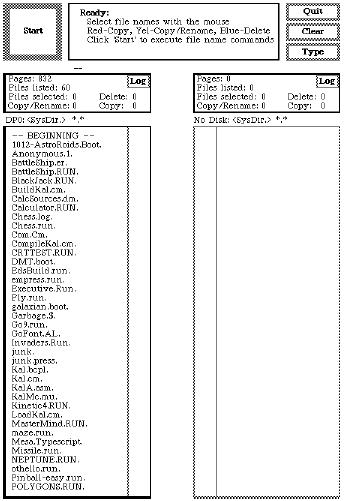

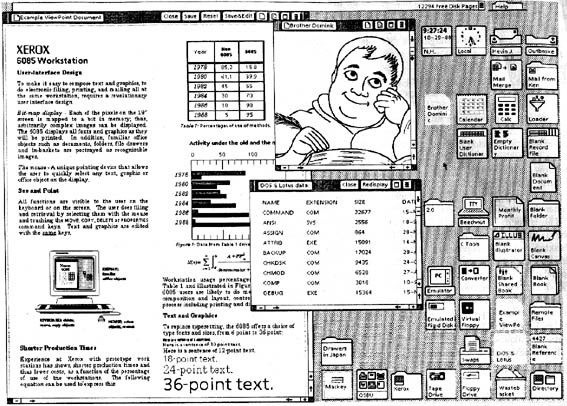

- 2.5. Alto running the Executive file browser (c.1974).

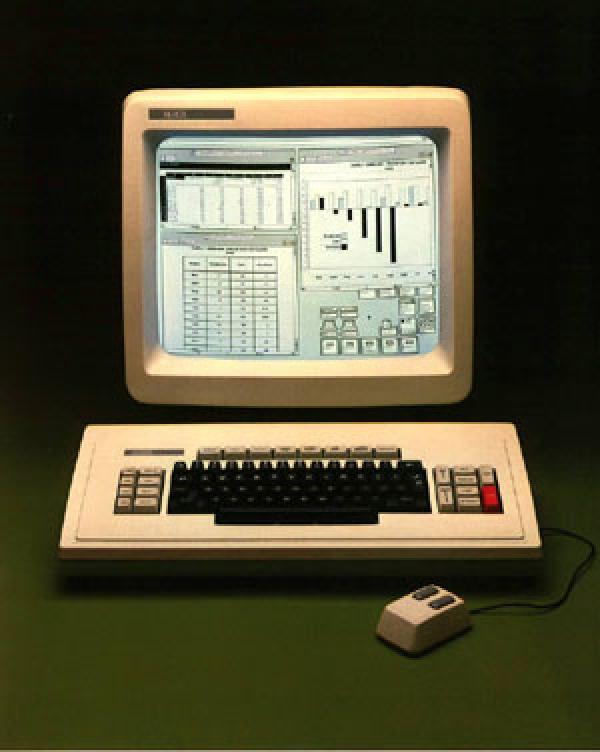

- 2.6. The Xerox Star (1981).

- 2.7. Screen shot from a Star (1981).

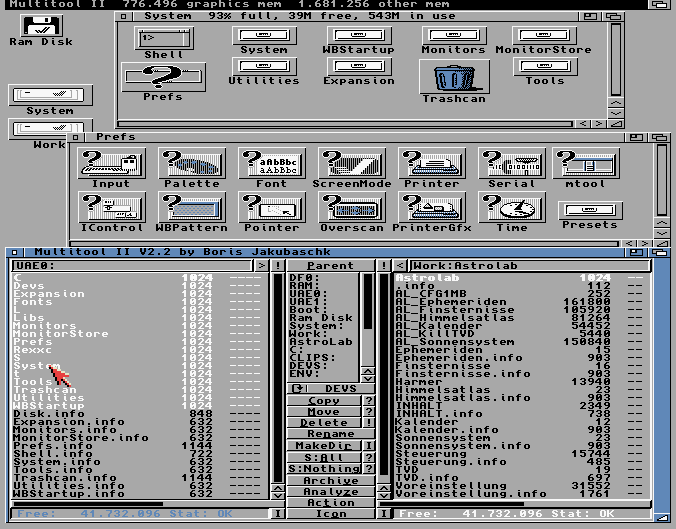

- 2.8. Kickstart on the Amiga 1000 (1985).

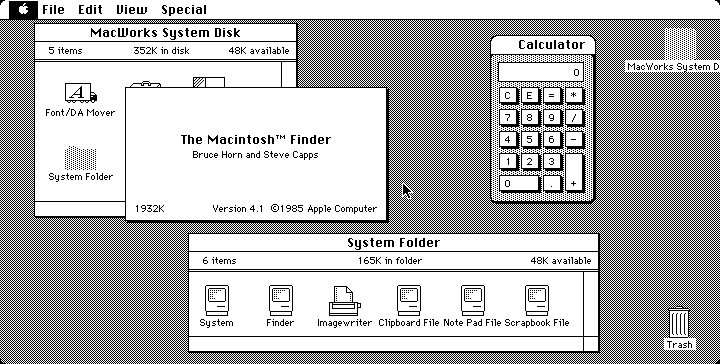

- 2.9. Early version of the Macintosh Finder (1985).

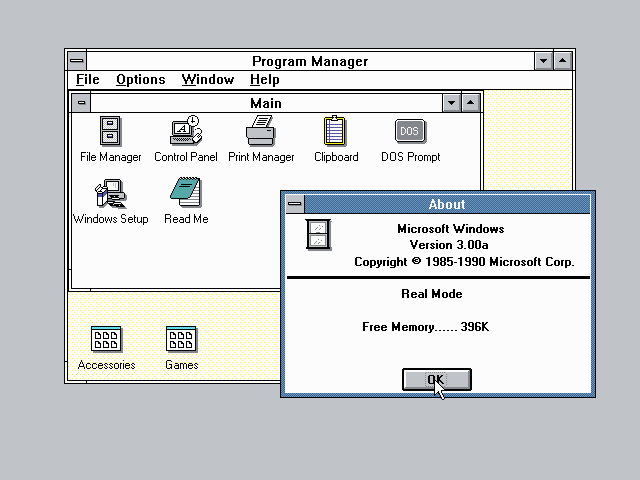

- 2.10. Windows 1.0 (1985).

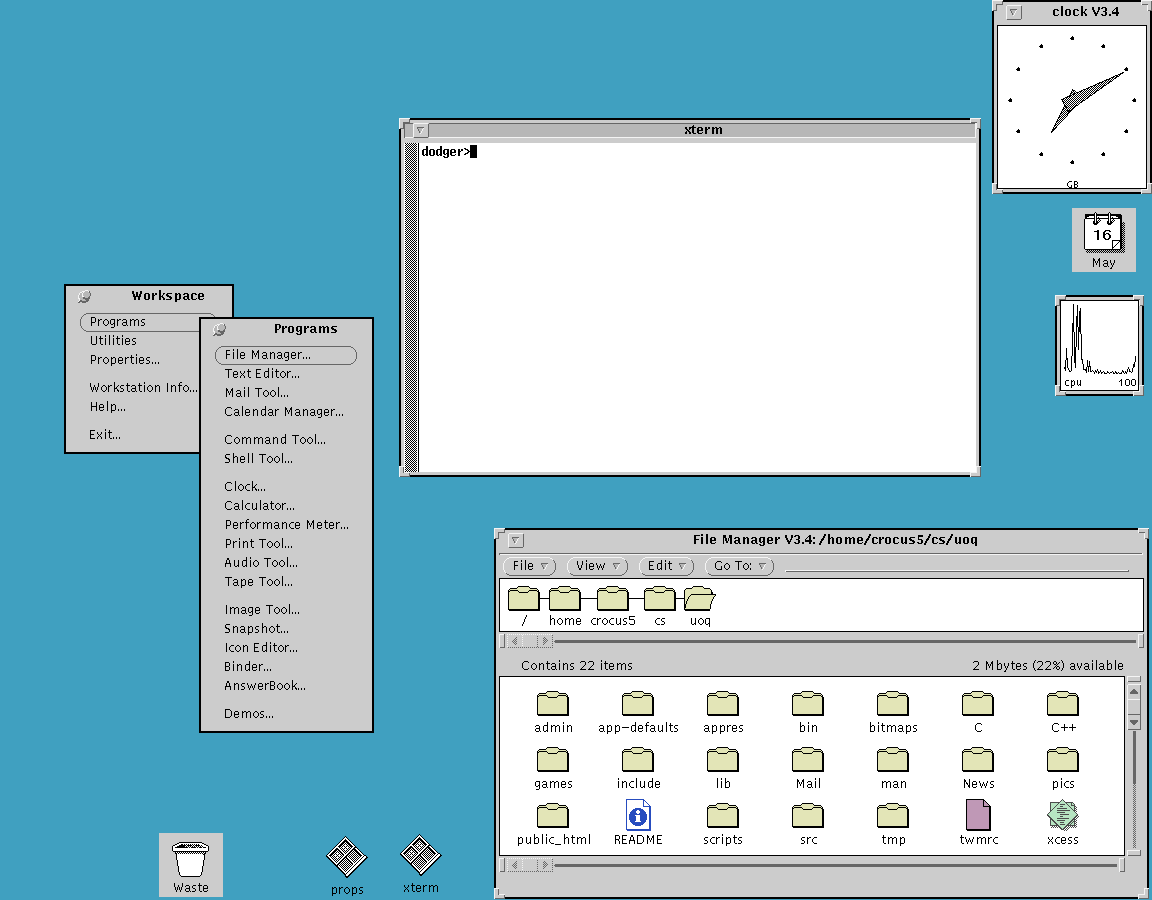

- 2.11. OpenLook (c.1989).

- 2.12. NeXTstep (1988).

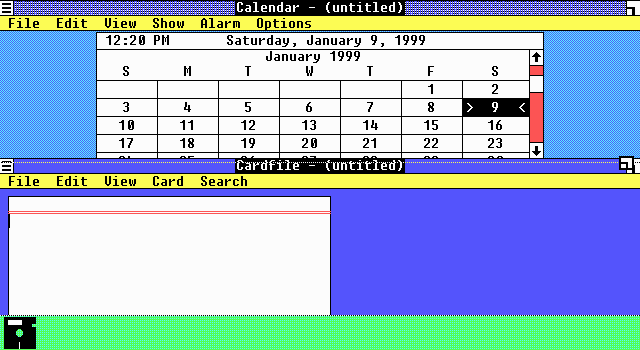

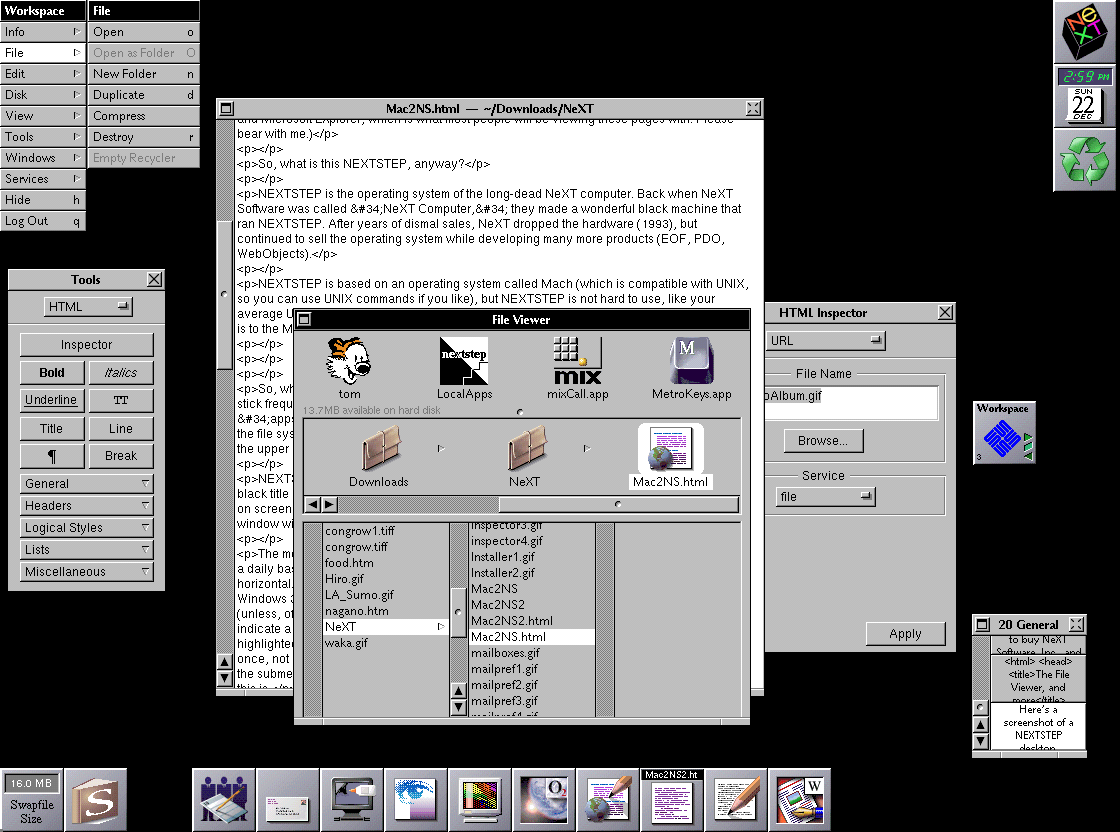

- 2.13. Windows 3.0 and 3.1 (1992).

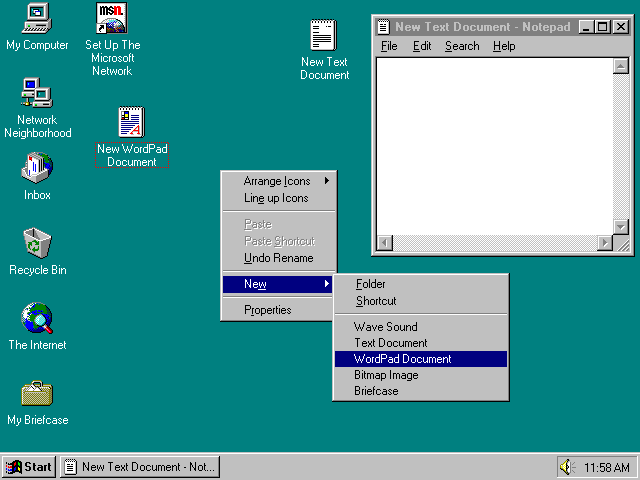

- 2.14. Windows 95 (1995).

- 2.15. Microsoft Bob home screen (1995).

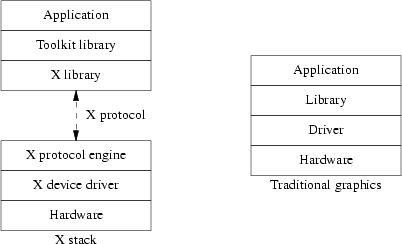

- 3.1. Comparing the X stack to a monolithic graphics system.

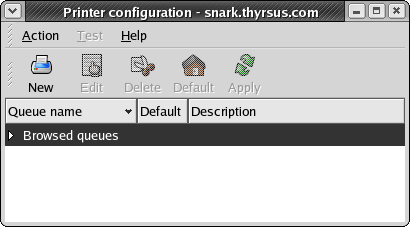

- 5.1. CUPS configuration: the saga begins

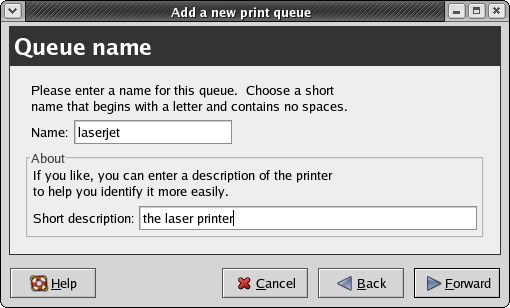

- 5.2. CUPS configuration: the Queue Name panel

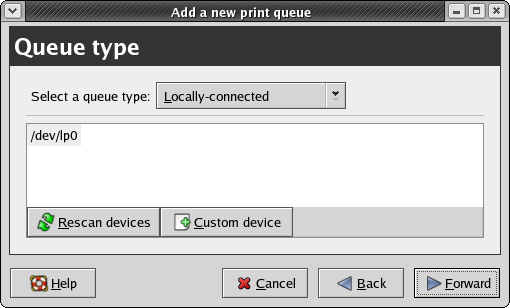

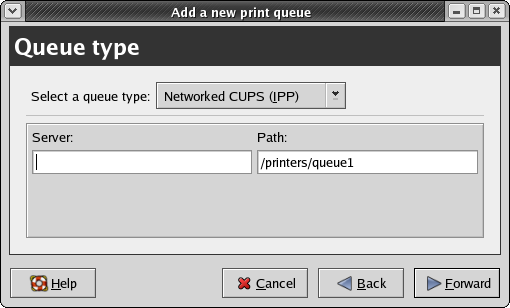

- 5.3. CUPS configuration: the Queue Type panel

- 5.4. CUPS configuration: the stone wall

- 5.5. xmms

- 5.6. xine-ui

- 5.7. gxine

An Invitation to Usability

What I saw in the Xerox PARC technology was the caveman interface, you point and you grunt. A massive winding down, regressing away from language, in order to address the technological nervousness of the user.

-- Attributed to an IBM technician lambasting the Apple Lisa, c. 1979

This book is about software usability engineering for Unix programmers, the presentational side of Unix software design. Its scope includes not only user interface (UI) design, but other related challenges such as making software easy to install, learn, and administer.

We choose the term “usability engineering” as a deliberate challenge to the all-too-prevalent notion that designing for usability demands special insight into areas like psychology or visual arts that are outside the scope of what programmers can do. We think the belief that usability engineering is a form of arcane magic best left to the exclusive province of specialists is both false and harmful, and is a passive but potent encouragement to slovenly interface design.

Just as damaging is the opposite assumption that usability engineering is trivial, and that usability can be sprayed on to a project after the design phase as though it were a coat of lacquer. The belief that security can be treated that way has caused no end of trouble as the Internet became a mass medium; similarly, the costs of after-the-fact attempts to spray on usability are rising as the potential audience of Linux and other Unixes expands to include more and more non-technical users.

We reject both extremes for the position that usability engineering should be considered a normal part of software design. There will always be a role for usability specialists, but basic competence in usability engineering can and should be part of every programmer's craft — even every Unix programmer's craft.

This book is aimed specifically at programmers operating within the Unix tradition, and especially at developers on modern open-source Unixes such as Linux. In The Art of Unix Programming we described the things Unix programmers do particularly well. In this book, on the other hand, we're going to delve into things Unix programmers have a long history of doing especially poorly. We think that deficit can and should be repaired.

Why start with Unix? The proximate cause is that the authors of this book understand how to address Unix programmers; we're writing for the audience we know. But more generally, we think that a book about usability engineering for Unix can be especially valuable because the Unix culture gets almost everything else right.

It has been famously claimed that all art aspires to the condition of music. If that's so, all operating systems seem to aspire just as insistently to the condition of Unix! One of the most persistent of the repeating patterns in the history of the field is large pieces of Unix getting grafted onto other environments (famously including Microsoft DOS, Microsoft Windows, and Mac OS 9) after their designers have run head-on into the limits of their original architectural model.

The planners behind Macintosh OS X noticed this, and concluded that it would work better to start from the solid architectural foundations of Unix and fix Unix's usability superstructure than to start from a highly usable but structurally weak operating system like Mac OS 9 and try to repair the foundations. The remarkable success of Linux at competing with Windows after 1995 suggests a similar conclusion.

As with architectures, so with architects. We believe Unix programmers who manage to integrate usability engineering into their craft will write fundamentally better software than usability-savvy programmers from other traditions trying belatedly to absorb Unix's architectural lessons in other areas like stability, security, and maintainability.

In the past, much of the existing literature on software usability engineering has been tied to a specific non-Unix environment, or for other reasons written in an idiom that Unix programmers have trouble understanding. We began this book with the goal of bridging that gap.

We are aware that we are fighting a considerable weight of history in our attempt. Historically, hard-core Unix programmers have tended to be systems and networking hackers with little interest in — even an active disdain for — end-user applications and user interfaces. More specifically, the Unix culture has a long tradition of dismissing graphical user interfaces with the sour attitude recorded in the epigraph of this chapter.

In The Art of Unix Programming[TAOUP], we argued vigorously that there is more than a little justice in the anti-GUI case, and that Unix's tradition of command-line and scriptable interfaces has powerful virtues which have often tended to get lost in waves of consumer-oriented hype. But we think the rise of the Unix-centered open-source movement is making a big difference here. It has certainly made many people outside the Unix camp more willing than ever before to listen to the case for the old-school Unix gospel of the pipe, the script, and the command line. And we believe that it has made Unix programmers themselves less prickly and defensive, more willing to accept the best from the GUI tradition rather than dismissing it as gaudy trash. Modern open-source Unixes like Linux are observably GUI-intensive to a degree that would have been barely imaginable even a decade ago, and younger Linux programmers especially seem to be trying to find an accommodation that discards neither the GUI nor the best of the Unix old school.

We think, or at least hope, that the Unix community is ready to develop a healthy indigenous tradition of GUI design, one which does not merely imitate older models but integrates them with Unix's native design rules and best practices. We see promise for this project in the fact that Unix has often led the way in GUI technology and infrastructure even as it lagged badly in GUI design. We hope that this book will help systematize the ideas and insights that the Unix community has been developing for itself and borrowing from elsewhere as it has slowly struggled to come to terms with the GUI.

More generally, we have written this book because we think we have detected signs that today's Unix community is ready to seriously take on usability issues, and to accept them as a challenge worthy of the same tremendous intelligence and effort that it has brought to its more traditional infrastructure concerns.

Our exposition will build on [TAOUP]. That book was an informal pattern language of Unix design, capturing Unix practice as it is. Much of it was concerned with developing a vocabulary (centered around terms like transparency, orthogonality, and discoverability) in which the implicit knowledge of the Unix tradition could be made explicit. In this book, we'll extend that vocabulary into the domain of usability engineering.

We'll use a combination of case studies with analysis similar to that in [TAOUP], and on a few topics we'll reuse bits of that book.[1] However, the emphasis in this book will be very different. Where discussion of interfaces in [TAOUP] was primarily geared to explaining the persistence of non-visual interfaces for sophisticated users under Unix, we will be focusing here on the design of interfaces for nontechnical end-users, and specifically on graphical user interfaces (GUIs).

As with other aspects of design, usability engineering combines inspiration with learned skill. We can't supply the inspiration, but we can help you learn a vocabulary, some tools, and some technique.

One final note: The world is full of books about software design that urge programmers to swallow heavy methodological prescriptions like a dose of castor oil, grimly admitting that the stuff tastes nasty but insisting it's good for your character. This book is not one of those. We believe that one of the characteristics of great software designs, whether in the internals of code or its user interfaces, is playfulness. We believe that effective software-design methods are playful as well, because pleasure is what human beings experience when they are operating at their highest sustainable levels of effort and creativity.

Accordingly, we hope (and believe) that this book will not only teach you how to be a better programmer, but show you how to have new kinds of fun in the process. So when we say that we hope you'll enjoy this book, it is not just a conventional gesture of fond hope, it's actually our most serious methodological prescription. Enjoy what you learn here, because that's how you'll do a better job.

[1] Specifically, the places where we have reused TAOUP material are in (1) the definitions of terms in the Premises chapter, and (2) the comparison of X toolkits in the Programming chapter. To the reader interested in exploring Unix's native non-GUI design tradition we recommend the Interfaces chapter.

Chapter 1. Premises: The Rules of Interface Design

In the beginner's mind there are many possibilities, but in the expert's there are few.

-- Zen Mind, Beginner's Mind (1972)

Usability engineering is like other forms of art and engineering in that some of what informs the practice is aesthetic intuition, but most of it is following rules. The intercourse between rule-bound form and intuitive spark is exactly where creativity happens; style (as a famous literary dictum has it) is the contrast between expectation and surprise.

Usability specialists often have a tendency to exaggerate the degree of intuition and inspiration involved, in part because they are sometimes unaware of the rules they are following (and some are not aware of following rules at all). But the rules of good usability engineering that have emerged from our experience with software interfaces since 1945 are remarkably consistent, both within themselves and with what we've learned from other areas of industrial design. Applying these involves difficult tradeoffs in the same way engineering always does, but there is nothing particularly complex or mystical about the rules themselves.

Usability Metrics

In [TAOUP] we introduced five basic metrics to categorize the quality of interfaces: concision, expressiveness, ease, transparency, and scriptability.

Concise interfaces have a low upper bound on the length and complexity of actions required to do a transaction; the measurement might be in keystrokes, gestures, or seconds of attention required. Concise interfaces get a lot of leverage out of relatively few controls.

Expressive interfaces can readily be used to command a wide variety of actions. Sufficiently expressive interfaces can command combinations of actions not anticipated by the designer of the program, but which nevertheless give the user useful and consistent results.

The difference between concision and expressiveness is an important one. Consider two different ways of entering text: from a keyboard, or by picking characters from a screen display with mouse clicks. These have equal expressiveness, but the keyboard is more concise (as we can easily verify by comparing average text-entry speeds). On the other hand, consider two dialects of the same programming language, one with a Unicode-string type and one not. Within the problem domain they have in common, their concision will be identical; but for anyone concerned with internationalization, the dialect with Unicode characters will be much more expressive.

Ease is inversely proportional to the mnemonic load the interface puts on the user — how many things (commands, gestures, primitive concepts) the user has to remember specifically to support using that interface. Programming languages have a high mnemonic load and low ease; menus and well-labeled on-screen buttons are easier to learn and use, but usually trade away concision or expressiveness for this virtue.

Transparent interfaces don't require the user to remember the state of his problem, his data, or his program while using the interface. An interface has high transparency when it naturally presents intermediate results, useful feedback, and error notifications on the effects of a user's actions. So-called WYSIWYG (What You See Is What You Get) interfaces are intended to maximize transparency, but can sometimes backfire — especially by presenting an over-simplified view of the domain.

Discoverable interfaces make it easy for the user to form and maintain a mental model not of the problem domain, but of the user interface itself. Discoverable interfaces usually leverage the user's understanding of the problem domain to help them figure out the controls. Various forms of assistance like on-screen labels, context sensitive help, or explanatory balloon popups can increase discoverability. But more important are qualities like the ability to experiment without damaging anything, and immediate feedback to let the user know what they just did when they try something. A truly discoverable interface makes the relationship between the controls and the task they perform intuitively obvious, and rewards experimentation rather than punishing it.

These virtues also have negative formulations in terms of staying out of the user's way. A concise interface doesn't get in the way by forcing the user to wade through a lot of waste motion to get the work done. An expressive interface doesn't get in the way by imposing the programmer's preconceptions about what the user is there to accomplish and how they're likely to go about it. An interface with ease doesn't get in the way by requiring users to learn and remember lots of non-obvious controls. A transparent interface doesn't get in the way by obscuring the state of the problem. A discoverable interface doesn't get in the way by requiring the user to attend a training course or read a manual.

For completeness, we'll note that scriptable interfaces are those which are readily manipulated by other programs. Scriptability reduces the need for costly custom coding and makes it relatively easy to automate repetitive tasks. Scriptability will not be a major theme in this book; see [TAOUP], instead, for an in-depth discussion.

Usability and the Power Curve

Usability is not one thing. It's actually a relationship between a program and its audiences. A program's usability changes as the audience changes; in particular, best practices for usability engineering change as its audiences' degrees of investment in the task and the software change. We'll find it useful, later on, to distinguish at least four different kinds of user from each other.

The nontechnical end-user has very little knowledge of either the application domain of your program or of computers and software in general, and is probably not very interested in acquiring more of either. Most users of ATMs (automatic teller machines), for example, fall in this category.

The domain expert has a lot of domain knowledge about the application your program addresses, but is unsophisticated about computers. Domain experts are usually motivated to learn more about their domain, but not necessarily about computers in general.

The power user has a lot of experience with computers and different kinds of software, but limited domain-specific knowledge. Power users (like domain experts) are often willing to learn more about what they are already good at, but may resist acquiring more domain knowledge.

The wizard or guru is strong in both domain-specific and general computer knowledge, and is willing to work at getting more of either.

Of course, the degree to which any one person is a domain expert varies with the domain. And even wizards don't want to have to be wizards all the time; even a software guru who enjoys programming (say) printer drivers usually just wants to press a button and have the printer print without fuss or fiddling.

Nevertheless, these four categories do define a power curve — nontechnical end-users at the low end, wizards at the high end, domain experts and power users in the middle. Their requirements and the kinds of interfaces that make them comfortable will differ. Just as importantly, an individual's requirements will change as he or she climbs the power curve.
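
The four categories above can be read as the corners of a two-by-two grid, with domain knowledge on one axis and general computer knowledge on the other. Here is a minimal sketch of that reading in Python. It assumes each kind of knowledge can be collapsed to a yes/no flag, which is a deliberate oversimplification (expertise varies with the domain and changes over time), and the names `UserKind` and `classify_user` are hypothetical, introduced only for illustration.

```python
from enum import Enum


class UserKind(Enum):
    """The four points on the power curve described in the text."""
    NONTECHNICAL_END_USER = "nontechnical end-user"
    DOMAIN_EXPERT = "domain expert"
    POWER_USER = "power user"
    WIZARD = "wizard"


def classify_user(knows_domain: bool, knows_computers: bool) -> UserKind:
    """Map two yes/no knowledge flags onto the four user categories."""
    if knows_domain and knows_computers:
        return UserKind.WIZARD
    if knows_domain:
        return UserKind.DOMAIN_EXPERT
    if knows_computers:
        return UserKind.POWER_USER
    return UserKind.NONTECHNICAL_END_USER


# Someone who knows the application domain well but not computers in general.
print(classify_user(knows_domain=True, knows_computers=False).value)
```

Because the flags depend on the domain, the same person can land in different corners for different programs, which is why a design should not assume a fixed audience.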

In [TAOUP] we noted that while nontechnical end-users value ease most, wizards will trade that away to get expressiveness and concision. In this book we'll explain and analyze that tradeoff further, focusing on the tension between task-oriented and feature-oriented interfaces.

The Rules of Usability

Many collections of user interface guidelines focus on the visual and presentational level of UI design; representative advice of this kind is to avoid using color alone to convey critical information, because about 10% of users have some variety of color blindness. Advice like this has its place, and we'll convey a good deal of it later in the book, but treatments that go at the UI design problem from the outside in often fail to engage deeper issues. The rules we choose to begin this book with are quite deliberately different: structural and logical, working from the inside out and (we believe) grounded in deep properties of the evolved nature of human beings.

These rules of usability are actually rules for programmers, who (almost by definition) are either power users or wizards. They are therefore aimed at programmers looking down the power curve, designing for an audience of domain experts and nontechnical end-users. But as we've already seen, even wizards are in the end-user position much of the time (especially when operating outside the specific realm of their domain knowledge). Therefore, you should consider these rules applicable for designing any program with an interactive interface.

We'll summarize the rules here, noting parallels with the Unix design rules from The Art of Unix Programming. While these rules are implicit in most good treatments of user-interface design, many have never before been written down explicitly. The rest of this book will unfold the implications and consequences of these rules.

Rule of Bliss: Allow your users the luxury of ignorance.

Today's computer users live in an information-saturated society, coping with a frenetic pace of change and living lives that consequently keep their ability to adapt and learn under constant challenge. Under these circumstances, the most precious gift a software designer can give is the luxury of ignorance: interfaces that don't require the consumer to learn another skill, to memorize more details, to shoulder yet another cognitive load.

Simplify, simplify, simplify. Look for features that cost more in interface complexity than they're worth and remove them. When you add features, make them unobtrusive so the user does not have to learn them until he or she is ready (there is a tradeoff here; it's easy to make them too hard to find so that the user never does).

To support the luxury of ignorance, write interfaces that are task-oriented rather than feature-oriented. Task-oriented interfaces walk the user through common sequences of operations, the opposite of the traditional Unix approach that exposes every possible feature switch but forces the user to learn the sequence of operations to accomplish a task. If your program must serve both nontechnical end-users and wizards, then the UI will need to support both task- and feature-oriented interfaces separately. Attempting to combine the two in a single set of controls almost invariably leads to an ugly mess.
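
The difference between the two styles can be sketched at the level of a program's entry points. The example below is hypothetical throughout: the printing scenario, the function names, and the option values are assumptions chosen for illustration, not taken from any real printing API. The feature-oriented function exposes every switch; the task-oriented functions are named for what the user wants to get done and ask only for what the user already knows.

```python
def print_document(name, copies=1, duplex=False, color=True, dpi=600):
    """Feature-oriented entry point: every switch is exposed to the caller."""
    return {"name": name, "copies": copies, "duplex": duplex,
            "color": color, "dpi": dpi}


def print_draft(name):
    """Task-oriented entry point: one named task, no switches to learn."""
    return print_document(name, copies=1, duplex=True, color=False, dpi=150)


def print_handouts(name, audience_size):
    """Task-oriented entry point: the user supplies only what they know."""
    return print_document(name, copies=audience_size, duplex=True)


# A wizard can still reach every switch through print_document();
# an end-user only ever sees the two task-level calls.
print(print_draft("report.txt"))
print(print_handouts("slides.txt", audience_size=12))
```

Keeping the task-level calls as a separate layer over the feature-level one mirrors the advice above: both audiences are served, but not through a single set of controls.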

Rule of Distractions: Allow your users the luxury of inattention.

One of the effects of an information- and media-saturated world is that people have to budget their attention as well as their learning capacity, and to learn to juggle multiple tasks routinely. Therefore, an equally important gift is the luxury of inattention: interfaces that don't require users to concentrate or change their mental focus from whatever else they are doing. Sometimes this is not a luxury; the task the computer is assisting may demand total attention. But it is nevertheless bad practice to assume that you can get and keep that.

One good test for an interface design is, therefore: can it be worked comfortably while the user is eating a sandwich, or driving a car, or using a cellphone? The real issue here isn't the challenge to physical dexterity but whether the software fits the user's hand and brain well enough to be useful even when the user's attention is divided.

Rule of Flow: Allow your users the luxury of attention.

Sometimes juggling multiple tasks won't do. High performance at writing, programming, and other forms of creative work almost requires human beings to enter what psychologist Mihaly Csikszentmihalyi has dubbed a flow state: intense but relaxed concentration, with total focus on the task.

Flow states, alas, are very fragile. They take time to establish and are easily broken by distractions. Badly-designed UIs are frequent culprits. Blinking text, graphical animations, popup windows, and intrusive sound effects can be nearly as disruptive as the flow state's deadliest enemy, the ringing telephone.[2]

Well-designed interfaces do not clamor for attention or advertise their own cleverness. Rather, they allow the user to maintain a focus on the task. They support concentration and creativity by getting out of the way.

Rule of Documentation: Documentation is an admission of failure.

The best user interfaces are so transparent and discoverable that they don't require documentation at all. Relying on documentation to explain your program is an admission that you lack the skill and/or imagination to write an interface that good.

And yes, writing programs that don't need documentation is possible. Computer-game designers manage it all the time, even for games that have command repertoires comparable in complexity to a word processor or spreadsheet. The key is crafting interfaces that only require the user to grasp a few operations initially, and are then highly discoverable and lead the user gradually towards mastery. Macintosh programmers have a well-developed tradition of designing UIs in this style.

The Unix experience is actively misleading when it comes to writing for end-users. Traditionally we provide powerful, feature-rich programs for technically adept and highly motivated users who are well up the power curve, and we're very good at that. Those users can benefit from documentation, especially the kind of terse but complete reference found on a good Unix manual page. Novices and nontechnical end-users at the bottom of the power curve are, however, a very different audience and demand different design assumptions.

It is not quite true that end-users never read documentation, but it is true that those who need documentation most are the least motivated and least likely to read it. Also, far too many of the (relatively few) programmers who write documentation fall into using “It's in the documentation!” as an excuse for opaque and poorly-thought-out UI designs.[3] If the Unix community is going to take usability seriously, this kind of laziness can no longer be acceptable.

For both these reasons, you should learn to treat documentation as an admission of failure — sometimes necessary for advanced topics and reference, but never to be relied upon in educating novices and nontechnical end-users.

Rule of Least Surprise: In interface design, always do the least surprising thing.

If the easiest programs to use are those that demand the least new learning from the user, then the easiest programs to use will be those that most effectively connect to the user's pre-existing knowledge.

Therefore, avoid gratuitous novelty and excessive cleverness in interface design. If you're writing a calculator program, ‘+’ should always mean addition! When designing an interface, model it on the interfaces of functionally similar or analogous programs with which your users are likely to be familiar. More generally, the best interface designs match a pre-existing model in the minds of their user populations.

It is especially important to be conservative and imitative about minor features that users have a relatively low incentive to learn. If you must invent a novel way of doing things, the least bad place to do it is right at the center of your design. Suppose, for example, that you are writing a program to generate reports from a database. Writing your own font-selection dialogue would be a bad idea, even if trying a radical new approach to specifying database searches might be a good one.[4]

(This is similar to the Rule of Least Surprise from [TAOUP]. It is also widely known under a slightly different title, as the Principle of Least Astonishment.)

In order to apply this rule, you need to know your audience — to have a model of how your users think and react. If your program has more than one potential audience, you need a mental model for each one.

Modeling your audience in the abstract is surprisingly difficult. To do it you have to step outside your assumptions and forget what you know, and programmers are notoriously bad at this. Fortunately, there is a simple tactic that makes this much easier; put a face on the problem. To serve an audience, make up a fictional character representative of that audience. Tell stories about that character and use those stories to get inside the character's mind (this technique is very like method acting).

Rule of Transparency: Every bit of program state that the user has to reason about should be manifest in the interface.

Hidden state denies users the luxury of inattention, because it means they have to hold things in their heads. An example familiar to many Unix users is older versions of the vi editor, which had no visual indication of whether one was in text-insertion or command mode. It was notoriously easy to forget and insert garbage or, worse, to perform an unintended edit operation by attempting to insert text.[5]

Mindspace is much more scarce and precious than screen space. Any piece of hidden state your program has is competing for one of those 7±2 slots in short-term memory (see the Rule of Seven below and the extended discussion of this number in the Wetware chapter). In a Unix environment, your program is likely to be one of several among which the user is time-sharing his attention; this makes competition for those slots all the fiercer. But interface design is not a game to be won by claiming those slots — to the contrary, you've done your job best when the user is freed to allocate them himself.

(This is analogous to, but somewhat different from, the Rule of Transparency in [TAOUP].)

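Rule of Modelessness: The interface's response to user actions should be consistent and never depend on hidden state.

This rule could be taken as a consequence of the Rule of Transparency, but violations are so common and such an important cause of interface problems that we have chosen to foreground it by making it a separate rule.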

An interface with “modes” responds to the same input gesture in different ways depending either on hidden state not visible to the user, or on where you are within the interface. Since the early days of the Macintosh, which eliminated mode dependencies almost completely, it has been widely understood that modes are a bad idea.

The classic bad example in Unix-land is vi(1), a commonly-used editor in which typed characters are either inserted before the cursor or interpreted as editor commands, depending on whether or not you are in insert mode. This is a well-known cause of confusion to novices, and even experts at this editor occasionally trash portions of their documents through having lost track of what mode they are in or inadvertently switched modes. Modern versions of vi(1) partly mitigate this design flaw by providing a visible mode indicator. We know this is not a complete solution, because even expert users can overlook the state of the mode indicator and find themselves in trouble.

We'll see in the Wetware chapter that modes interfere with the process of habituation by which users become expert with interfaces; indeed, they turn habituation into a trap. A computing system ideally designed for human use would have one single set of gestures and commands that are uniformly applicable and have consistent meanings across its entire scope.

The “Working storage” we're referring to is human short-term memory; the analogy to working storage in a virtual-memory operating system is (as we'll see in the Wetware chapter) both deliberate and fruitful.

This magic threshold of seven items has many applications in UI design. We've already noted one, which is that tracking any hidden state in a program is going to take up one or more of these slots.

It also implies a restriction on how many controls you should have visible at any one time. While users may be able to visually recognize more than seven controls, actually using them will involve refreshing short-term memory with retrieved knowledge about them. If that retrieved knowledge doesn't fit easily in the working store, the cognitive cost of using the interface will go up sharply.

(We'll develop more consequences of the magic number seven in the Wetware chapter.)

Users make mistakes. An interface that doesn't support backing gracefully out of those mistakes is not a friendly one. Furthermore, absence of an undo inhibits users from exploring and mastering an interface, because that absence makes them wary of doing something irreversibly bad.

Sometimes irreversibility is unavoidable. When you send a mail message there is a commit point past which, having handed a message to the mail infrastructure, you cannot recall it. When you format a hard drive you are physically erasing information that cannot be reconstructed. There are a few applications, such as on-line payment systems, in which irreversibility is a design requirement.

But these are the exceptions, not the rule. Most applications are more like editing text or graphics; the program is a tool with which the user shapes or transforms some kind of database or document. The inexpensive storage and computing power of today's machines allows editor-like applications to keep complete change histories for at least the current session (if not for the entire life of the database/document), and to allow the user to move back and forward in those histories. This is better UI design than having lots of irreversible commit points with confirmation prompts.
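As a rough illustration of a session-long change history, here is a minimal sketch in Python. It assumes the document can be treated as an immutable snapshot (a string here) and that edits are plain functions from one snapshot to the next; the `History` class and its method names are hypothetical, invented for this example rather than taken from any particular editor.

```python
class History:
    """Linear undo/redo history over immutable document snapshots."""

    def __init__(self, initial):
        self._states = [initial]   # every snapshot is kept for the session
        self._pos = 0              # index of the current snapshot

    @property
    def current(self):
        return self._states[self._pos]

    def apply(self, edit):
        """Apply an edit function, discarding any snapshots that were undone."""
        new_state = edit(self.current)
        del self._states[self._pos + 1:]
        self._states.append(new_state)
        self._pos += 1
        return new_state

    def undo(self):
        """Step back one snapshot; a no-op at the start of the history."""
        if self._pos > 0:
            self._pos -= 1
        return self.current

    def redo(self):
        """Step forward one snapshot; a no-op at the end of the history."""
        if self._pos < len(self._states) - 1:
            self._pos += 1
        return self.current


doc = History("")
doc.apply(lambda text: text + "Hello")
doc.apply(lambda text: text + ", world")
doc.undo()    # back to "Hello"
doc.redo()    # forward to "Hello, world"
```

Keeping whole snapshots is the simplest choice and is wasteful for large documents; a real editor would more likely store deltas. The point of the sketch is only that no edit needs a confirmation prompt when every edit can be walked back.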

On those occasions when you cannot avoid irreversible commit points, it is very bad practice to have confirmation prompts for which the normal answer is “Yes, proceed onwards”. Thus, routine confirmation prompts are a bad idea; when confirmation prompts happen at all, they should always be surprises that make the user stop and think.

The problem with routine confirmation prompts is that they condition users to reflexively click “yes” just to get over that hurdle. There always comes a day when that reflexive “yes” leads to grief. Don't train your users to have bad reflexes, because even if it doesn't cause them problems when using your program, it may well lead them into destructive errors while using someone else's.

An important aspect of discoverability that often gets short shrift is that it should be easy to learn from failure as well as success. A special hell awaits the designers of programs whose response to errors is a message or popup giving a hex code, or one cryptic line that simply says “An error occurred...”.

Documentation can fail this way, too. The most frustrating and useless documentation is the kind that describes in exact detail what normal operation is like (complete with pretty color screenshots of all the screens the user is going to see anyway), but gives no hints about how to recover when an operation does not succeed. And the uttermost depths of bad user experiences are plumbed by programs that fail cryptically, then point the user at documentation that doesn't explain how to cope.

In a well-designed UI, all failures are informative. There are no brick walls; the user always has a place to go next and learn more about the failure and how to recover from it.
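To make "informative failure" concrete, here is a small Python sketch of a save operation that reports what failed, why, and what the user might try next. The hint table, the wording of the hints, and the function names are all assumptions made for illustration; a real program would need hints matched to its own failure modes.

```python
import errno

# Hypothetical recovery hints keyed by errno value; a real program would cover more cases.
RECOVERY_HINTS = {
    errno.ENOENT: "Check that the folder exists, or choose a different location.",
    errno.EACCES: "You do not have permission to write there; try saving in your home folder.",
    errno.ENOSPC: "The disk is full; free some space or save to another disk.",
}

# Fallback hint for errors we have no specific advice for (also hypothetical).
GENERIC_HINT = "See the troubleshooting section of the help for more detail."


def explain_failure(action, path, error):
    """Turn an OSError into a message saying what failed, why, and what to try next."""
    reason = error.strerror or "unknown reason"
    hint = RECOVERY_HINTS.get(error.errno, GENERIC_HINT)
    return f"Could not {action} '{path}': {reason}. {hint}"


def save_text(path, text):
    """Write text to path; return None on success or an explanatory message on failure."""
    try:
        with open(path, "w", encoding="utf-8") as handle:
            handle.write(text)
    except OSError as error:
        return explain_failure("save", path, error)
    return None
```

Note that success returns nothing at all, while every failure path ends in a sentence that points somewhere; there is no branch that produces a bare code.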

One of Unix's oldest and most persistent design rules is that when a program has nothing interesting or surprising to say, it should shut up. Well-behaved programs do their jobs unobtrusively, with a minimum of fuss and bother. Silence is golden.

(This is the Rule of Silence from [TAOUP].)

This rule could be considered a derivative of the Rule of Flow. Many other programming traditions routinely violate it, fatiguing users with a barrage of spurious interrupts that carry little or no real information. Sometimes this happens because the interface designer can't resist showing off his technique. More often, it happens because a lot of activity is good for grabbing the customer's attention at a demo or sales pitch, and the software is designed to be sold rather than used.

When you can't be silent, be clear but unobtrusive. Having part of your program's display quietly change color and display a message is better than a loud, noisy popup that forces the user to take his mind off the task in order to dismiss the popup.

The very height of perversity is reached by programs that interrupt the user's concentration under the pretext of being helpful — in a society that really valued flow states and respected concentration, the inventor of the animated ‘assistant’ in Microsoft Word would have been torn to pieces by an enraged mob. If you remember that allowing the luxury of attention is an important part of your job as a software designer, you won't repeat the Clippy mistake.

A well-designed hardware/software combination is a mind amplifier in precisely the same sense that a lever is a muscle amplifier. The point of computers is to free humans from having to do low-level calculation and information-shuffling: to serve humans. Every time you require a human user to tell a computer things that it already knows or can deduce, you are making a human serve the machine.

Any time you make the user enter magic data that is used solely for bookkeeping within or between computers, you are violating this rule. A prime example is IPv4 dot-quad addresses; the fact that users occasionally have to type these is a clear-cut failure in UI design. Indeed, the fact that users ever have to know about numeric IP addresses at all (as opposed to tagging their network hosts by name or having IP addresses be autoconfigured) is a UI design failure of some magnitude. It has not normally been thought of as one, because most of the burden falls on network administrators and programmers; but techies are people too, and the amount of skilled time that has been wasted because IP addresses are difficult to remember is undoubtedly immense.

Another excellent reason not to require users to tell the computer anything twice is that it invites inconsistencies. Suppose for example that your program requires the user to enter his/her email address in two different places; this creates the certainty that someday some user is going to typo one of them, or update one and forget the other.

The chance that inconsistencies of this kind will be spotted and fixed before they do damage falls as the number of places the data can be inconsistent rises. Database designers have a rule that there should be a unique SPOT (Single Point of Truth) for each piece of data; good user interfaces have the same property for the same reason.
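The same property can be sketched in a few lines of Python. In this hypothetical `Profile` class the address is stored once, and everything else that needs it is derived on demand; the class and attribute names are invented for the example.

```python
class Profile:
    """Holds the user's email address in exactly one place."""

    def __init__(self, email):
        self.email = email          # the single point of truth

    @property
    def reply_to(self):
        # Derived on demand, so it can never disagree with self.email.
        return self.email

    @property
    def signature(self):
        # Also derived; there is no second copy to fall out of date.
        return f"-- \n{self.email}"


profile = Profile("user@example.org")
profile.email = "new@example.org"   # one update; every derived view follows
```

Because there is no second stored copy, there is nothing for the user to update twice and nothing that can drift out of agreement.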

Autodetection is the best thing — the ideal user interface deduces what the user wants to do and does it. But autodetection can become a problem if the computer guesses wrong and there is no way to override the guess. This is one of the most common and damaging UI mistakes, especially among designers who don't have the Unix-tradition bias towards allowing users to configure everything.

Therefore, the best thing is usually for autodetection to supply defaults which the user can override. One of the central design issues for any good UI is how deep to bury the place where overriding any given parameter is possible. If it's too close to the immediately-accessible front of the interface, the user will often be burdened with a detail that is not normally relevant. If it's buried too far in the back (down a deep tree of menus or options), the user may never find it even when he/she needs it.
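One common way to arrange this is a simple precedence chain: an explicit user override wins, then whatever can be autodetected, then a safe built-in default. The Python sketch below applies that chain to choosing a text editor. The `VISUAL` and `EDITOR` environment variables are a long-standing Unix convention; the function names and the choice of fallback are assumptions made for illustration.

```python
import os

SAFE_DEFAULT_EDITOR = "vi"  # hypothetical fallback; the safe choice depends on the platform


def detect_editor(environ):
    """Autodetect the user's preferred editor from conventional environment variables."""
    for name in ("VISUAL", "EDITOR"):
        value = environ.get(name)
        if value:
            return value
    return None


def resolve_editor(override=None, environ=None):
    """Return (editor, source): explicit override, then autodetection, then the default."""
    if environ is None:
        environ = os.environ
    if override:
        return override, "override"
    detected = detect_editor(environ)
    if detected:
        return detected, "autodetected"
    return SAFE_DEFAULT_EDITOR, "default"
```

Returning the source alongside the value is a small design choice worth noting: it lets the interface show the user where a setting came from, which makes a wrong guess much easier to spot and override.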

In [TAOUP], a book about designing programs, we emphasized that “Keep It Simple, Stupid!” is the unifying meta-principle behind Unix's design rules, in part because programmers all too frequently overestimate their own capacity to handle code complexity. KISS is the unifying thread in designing good user interfaces, too, if for a slightly different reason: interface designers (especially if they are programmers) tend to overestimate everyone else's capacity to handle interface complexity as well.

With user interfaces as with code, simple designs are actually more intelligent because they avoid the edge cases and failure modes that dog complex designs. But many programmers who understand this about the internals of code are, unfortunately, resistant to the same insight when it comes to UI designs — they will cheerfully pile on controls, each one defensible in itself, until the overall complexity of the interface makes it nigh-impossible for anyone but a programmer already involved with the development to understand.

The only effective counter to this tendency is a ruthless commitment to keeping interfaces simple — simpler than they would need to be for the developer's own use.

Keeping things simple does not equate to dumbing them down. Removing capabilities or obsessively handholding the user in order to “simplify” an interface is a poor substitute for packaging its capabilities in a clean and usable way. But it is equally misguided and lazy to attack simplifications of an interface by claiming that they necessarily dumb it down. The test for a good simplification should always be the same — whether or not it makes the user experience better — and that test should be checked with real users.

Remember that predictability (a user's ability to correctly model the operation of the program and assimilate it to what he or she already knows) is far more important than visual appeal, and that you don't get usability from mere prettiness. Beware of pushing pixels around too much.

One of the most common failure modes in UI design is to create interfaces that are superficially pretty but difficult to use (horrible examples abound, and we will cite some later in this book). Conversely, there are interfaces that are visually cluttered and ugly but highly usable (we'll cite examples of this as well). The latter failure mode is less common, because it is much easier to polish the appearance of a UI than it is to engineer in better usability.

Far too many programmers who would never consider shipping a library without a test suite are somehow willing to ship programs that feature an interactive UI without testing them on real users. This is the blunder in practice that makes violations of all the other rules keep on giving pain — developers don't test, so they either never find out how bad their programs' usability is or find it out after they have too much invested in the interface design to change it easily.

User testing later in the process will be much less an ordeal if you make some effort to be in contact with end-user reality while you're designing. Go out and talk to people who are likely to use the thing. Slap together a quick prototype and get them to complain about it at length. Get a piece of paper and ask them to draw the interface they want with a pencil. You don't have to implement exactly what they say, but it lets you know what they're thinking rather than guessing at it.

Another way to make the test phase more rapid and effective is to apply heuristic evaluation after you have a prototype but before you start looking for test subjects. Later in this book we'll describe a way to do that, which is not a substitute in itself for end-user testing but can go a long way in reducing the amount of it you'll need before converging on a good result.

After settling on the term “usability engineering” and writing down all but one of the design rules in the previous section, we discovered that there had been one previous attempt, widely respected among specialists in the field, to capture what is known about software interface design in a collection of rules. This was a set of heuristics proposed in a 1990 paper [Nielsen&Molich] and further developed in Jakob Nielsen's 1994 book Usability Engineering [Nielsen].

Upon inspection, we discovered that our approach converges with Nielsen's to a remarkable degree. The few marked differences are as instructive as the similarities. We'll therefore walk through Nielsen's heuristics here, and later in this book we'll propose an adaptation of the Nielsen-Molich heuristic evaluation method that should be readily applicable even given the decentralized organization characteristic of many of today's Unix projects.

- Visibility of system status

The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.

Comment: Corresponds to our Rule of Transparency.

- Match between system and the real world

The system should speak the users' language, with words, phrases and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.

Comment: Corresponds to our Rule of Least Surprise.

- User control and freedom

Users often choose system functions by mistake and will need a clearly marked "emergency exit" to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.

Comment: Corresponds to our Rule of Reversibility.

- Consistency and standards

Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.

Comment: Corresponds to our Rule of Modelessness.

- Error prevention

Even better than good error messages is a careful design which prevents a problem from occurring in the first place.

Comment: We have no rule that directly corresponds.

- Recognition rather than recall

Make objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate.

Comment: Corresponds to our Rule of Transparency.

- Flexibility and efficiency of use

Accelerators — unseen by the novice user — may often speed up the interaction for the expert user such that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions.

Comment: We have no rule that directly corresponds.

- Aesthetic and minimalist design

Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.

Comment: Corresponds to our Rule of Silence.

- Help users recognize, diagnose, and recover from errors

Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution.

Comment: Corresponds to our Rule of Failure.

- Help and documentation

Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user's task, list concrete steps to be carried out, and not be too large.

Comment: Corresponds to our Rule of Documentation and Rule of Failure, adding more specific advice about documentation style.

Missing from Nielsen's heuristics are the Rule of Bliss, the Rule of Distractions, the Rule of Flow, the Rule of Seven, the Rule of Confirmation, the Rule of Automation, the Rule of Defaults, the Rule of Respect, and the Rule of Reality; however, the Rule of Reality is strongly implicit in the rest of the Nielsen-Molich method. We have no rule that directly corresponds to Nielsen's heuristics “Error prevention” and “Flexibility and efficiency of use”.

Part of the reason we set more rules is that we have a decade more of experience, during which some fundamentals of the problem have become clearer. Another reason, though, is that Nielsen's approach is like that of most other interface design gurus (such as Bruce Tognazzini, today's principal exponent of the Macintosh style) in that it works from the outside inwards, where ours works from the inside outwards. We are more influenced both by the Unix tradition of system design from the internals outwards, and by attacks on the UI design problem like [Raskin] that seek to generate design rules not just by empirical observation but from considerations of the deep structure of human cognition.

As we learn more, the similarities between outside-in prescriptions like Jakob Nielsen's and Bruce Tognazzini's (on the one hand) and inside-out prescriptions like ours and Jef Raskin's (on the other) increase. The most basic thing both approaches have in common is the understanding that, ultimately, excellence in user-interface design comes from identifying with the user's experience.

Keeping the internals of code simple is at the heart of what it means to think like a programmer, especially a Unix programmer. But keeping interfaces simple demands the opposite discipline — learning how not to think like a programmer, and instead thinking like the end-users that you are serving. Interfaces that are really smart match the needs of those users.

Identifying with the end-user is a difficult and humbling task for many programmers. But if you nerve yourself to the effort, you may find great satisfaction in writing programs which are as responsive and intuitive from the outside as they are elegant within. The ultimate test of software (as with any other tool) is how well it serves human beings, and it is in serving other human beings that we reach the true zenith of craftsmanship.

If that's too abstract and idealistic for you, think of this. No matter how skilled you are, there are many times when you will be the end user. When you give yourself standing to demand good UI design from others by setting an example of good UI design yourself, the time and sanity you save will ultimately be your own. At the zenith of craftsmanship, through serving others you serve yourself — and vice-versa.

To reach that zenith, go back to zero. In [TAOUP] we used some ancient ideas from Zen Buddhism to illuminate the craft of writing code. Here is one that applies to user-interface design: beginner's mind. “Beginner's mind” is the stance that approaches a task with no assumptions, no habits, perfect ignorance awaiting experience. Achieving beginner's mind involves forgetting what you know, so that you can learn beyond the limits of what you know.

To design interfaces for end-users — to keep them simple, stupid — approach the interface-design task with beginner's mind.

[2] Or the person from Porlock...

[3] You should then write the documentation anyway, as an exercise in humility, because nobody's perfect. Just don't rely on anyone ever reading it except in very unusual circumstances.

[4] The insight that the best place to innovate is right at the center comes from [Lewis&Rieman].

[5] Newer versions of vi have a visible insert-mode indicator.

Show me the face you had before you were born.

-- Traditional Rinzai Zen koan

Software designers who don't understand history often find themselves doomed to repeat it, often more expensively and less productively than the first time around. So it's worth taking a look at the history of user-interface design to see what kinds of trends and patterns we can discern that might still inform today's practice. We'll draw some specific lessons from this history, but many others await the discerning reader.

One of the largest patterns in the history of software is the shift from computation-intensive design to presentation-intensive design. As our machines have become more and more powerful, we have spent a steadily increasing fraction of that power on presentation. The history of that progression can be conveniently broken into three eras: batch (1945-1968), command-line (1969-1983) and graphical (1984 and after). The story begins, of course, with the invention[6] of the digital computer. The opening dates on the latter two eras are the years when vital new interface technologies broke out of the laboratory and began to transform users' expectations about interfaces in a serious way. Those technologies were interactive timesharing and the graphical user interface.

In the batch era, computing power was extremely scarce and expensive. The largest computers of that time commanded fewer logic cycles per second than a typical toaster or microwave oven does today, and quite a bit fewer than today's cars, digital watches, or cellphones. User interfaces were, accordingly, rudimentary. Users had to accommodate computers rather than the other way around; user interfaces were considered overhead, and software was designed to keep the processor at maximum utilization with as little overhead as possible.

The input side of the user interface for batch machines was mainly punched cards or equivalent media like paper tape. The output side added line printers to these media. With the limited exception of the system operator's console, human beings did not interact with batch machines in real time at all.

Submitting a job to a batch machine involved, first, preparing a deck of punched cards describing a program and a dataset. Punching the program cards wasn't done on the computer itself, but on specialized typewriter-like machines that were notoriously balky, unforgiving, and prone to mechanical failure. The software interface was similarly unforgiving, with very strict syntaxes meant to be parsed by the smallest possible compilers and interpreters.

Once the cards were punched, one would drop them in a job queue and wait. Eventually, operators would feed the deck to the computer, perhaps mounting magnetic tapes to supply another dataset or helper software. The job would generate a printout, containing final results or (all too often) an abort notice with an attached error log. Successful runs might also write a result on magnetic tape or generate some data cards to be used in later computation.

The turnaround time for a single job often spanned entire days. If one were very lucky, it might be hours; real-time response was unheard of. But there were worse fates than the card queue; some computers actually required an even more tedious and error-prone process of toggling in programs in binary code using console switches. The very earliest machines actually had to be partly rewired to incorporate program logic into themselves, using devices known as plugboards.

Early batch systems gave the currently running job the entire computer; program decks and tapes had to include what we would now think of as operating-system code to talk to I/O devices and do whatever other housekeeping was needed. Midway through the batch period, after 1957, various groups began to experiment with so-called “load-and-go” systems. These used a monitor program which was always resident on the computer. Programs could call the monitor for services. Another function of the monitor was to do better error checking on submitted jobs, catching errors earlier and more intelligently and generating more useful feedback to the users. Thus, monitors represented a first step towards both operating systems and explicitly designed user interfaces.

Command-line interfaces (CLIs) evolved from batch monitors connected to the system console. Their interaction model was a series of request-response transactions, with requests expressed as textual commands in a specialized vocabulary. Latency was far lower than for batch systems, dropping from days or hours to seconds. Accordingly, command-line systems allowed the user to change his or her mind about later stages of the transaction in response to real-time or near-real-time feedback on earlier results. Software could be exploratory and interactive in ways not possible before. But these interfaces still placed a relatively heavy mnemonic load on the user, requiring a serious investment of effort and learning time to master.

Command-line interfaces were closely associated with the rise of timesharing computers. The concept of timesharing dates back to the 1950s; the most influential early experiment was the MULTICS operating system after 1965; and by far the most influential of present-day command-line interfaces is that of Unix itself, which dates from 1969 and has exerted a shaping influence on most of what came after it.

The earliest command-line systems combined teletypes with computers, adapting a mature technology that had proven effective for mediating the transfer of information over wires between human beings. Teletypes had originally been invented as devices for automatic telegraph transmission and reception; they had a history going back to 1902 and had already become well-established in newsrooms and elsewhere by 1920. In reusing them, economy was certainly a consideration, but psychology and the Rule of Least Surprise mattered as well; teletypes provided a point of interface with the system that was familiar to many engineers and users.

The widespread adoption of video-display terminals (VDTs) in the mid-1970s ushered in the second phase of command-line systems. These cut latency further, because characters could be thrown on the phosphor dots of a screen more quickly than a printer head or carriage can move. They helped quell conservative resistance to interactive programming by cutting ink and paper consumables out of the cost picture, and were to the first TV generation of the late 1950s and 60s even more iconic and comfortable than teletypes had been to the computer pioneers of the 1940s.

Figure 2.3. VT100 terminal.

The VT100, introduced in 1978, was probably the single most popular and widely imitated VDT of all time. Most terminal emulators still default to VT100 mode.

Just as importantly, the existence of an accessible screen (a two-dimensional display of text that could be rapidly and reversibly modified) made it economical for software designers to deploy interfaces that could be described as visual rather than textual. The pioneering applications of this kind were computer games and text editors; close descendants of some of the earliest specimens, such as rogue(6) and vi(1), are still a live part of Unix tradition.

Screen video displays were not entirely novel, having appeared on minicomputers as early as the PDP-1 back in 1961. But until the move to VDTs attached via serial cables, each exceedingly expensive computer could support only one addressable display, on its console. Under those conditions it was difficult for any tradition of visual UI to develop; such interfaces were one-offs built only in the rare circumstances where entire computers could be at least temporarily devoted to serving a single user.

We took the trouble to describe batch computing in some detail because in 2004 this style of user interface has been dead for sufficiently long that many programmers will have no real idea what it was like. But if some of the above seems nevertheless familiar, it may be because many of the behavioral characteristics of batch systems are curiously echoed by a very modern technology, the World Wide Web. The reasons for this have lessons for UI designers.

JavaScript, Java, and Flash support limited kinds of real-time interactivity on web pages. But these mechanisms are fragile and not universally supported; the Common Gateway Interface — Web forms — remains the overwhelmingly most important way for web users to do two-way communication with websites. And a Web form fed to a CGI behaves much like the job cards of yesteryear.

As with old-style batch systems, Web forms deliver unpredictable turnaround time and cryptic error messages. The mechanisms for chaining forms are tricky and error-prone. Most importantly, web forms don't give users the real-time interactivity and graphical point-and-shoot interface model they have become used to in other contexts. Why is this?

Batch systems were an adaptation to the scarcity of computer clock cycles; the original computers had none to spare, so only a bare minimum went to impedance-matching with the brains of humans. Web forms are primitive for an equally good reason, but the controlling scarcity was one of network bandwidth. In the early 1990s when the Web was being designed, the cabling and switching fabric to support millions of real-time-interactive remote sessions spanning the planet did not exist. The deficit wasn't so much one of bandwidth (available bits per second of throughput) but of latency (expected turnaround time for a request/response).

The designers of CGI knew most of their users would be using connections with serious latency problems, on communications links that often dropped out without warning. So they didn't even try for real-time interactivity. Instead, the interaction model for the Web in general and web forms in particular is a discrete sequence of requests and responses, with no state retained by the server between them.
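The stateless request/response model can be sketched as a handler whose reply depends only on the fields submitted with the current request. The Python example below is an illustration of that idea, not of any real CGI library: `handle_request`, the page names, and the `step` field are all hypothetical. Progress through a multi-page form travels with the client as hidden fields instead of living in server memory.

```python
def handle_request(form):
    """Stateless handler: the reply depends only on the fields in this request."""
    step = form.get("step", "1")
    if step == "1":
        # First page: ask for a name. Nothing is remembered on the server.
        return {"page": "ask_name", "hidden": {"step": "2"}}
    if step == "2":
        name = form.get("name", "").strip()
        if not name:
            return {"page": "ask_name", "hidden": {"step": "2"},
                    "error": "Please enter a name."}
        # Carry the name forward inside the next form, not in server memory.
        return {"page": "ask_email", "hidden": {"step": "3", "name": name}}
    if step == "3":
        return {"page": "done",
                "summary": f"{form.get('name', '')} <{form.get('email', '')}>"}
    return {"page": "error", "error": f"Unknown step {step!r}; please start again."}


# Each call stands alone, just as each job deck did.
first = handle_request({})
second = handle_request({"step": "2", "name": "Ada"})
third = handle_request({**second["hidden"], "email": "ada@example.org"})
```

Because no call depends on an earlier one having reached the server, a dropped connection costs the user one resubmission and nothing more, which is exactly the property a high-latency, unreliable link calls for.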

The batch-to-CGI correspondence is not perfect. The batch analog of dropped connections — permanent interruptions in the act of feeding cards into a machine, as opposed to just unpredictable delays — was relatively rare. And one of the reasons the CGI model is stateless on the server side is because retaining even small amounts of session state can be cost-prohibitive when you might have thousands or millions of users to deal with daily, not a problem batch systems ever had. Still, the analogy does help explain why the Web was not designed for real-time interactivity.

Today, in 2004, it is largely demand for the Web that has funded the build-out of the Internet to the point where massive real-time interactivity is thinkable as more than a pipe dream. We're still not there; latency and bandwidth constraints are still severe, as anyone who has watched the slow and stuttering progress of a video download can attest.

The lesson here is that the batch processing style is still adaptive when latency is large and unpredictable. We may almost solve that problem for the planetary Web in the next few decades, but there are fundamental physical reasons it cannot be banished entirely. The lightspeed limit is perhaps the most fundamental; it guarantees, among other things, that round-trip latency between antipodal points on the Earth's surface has a hard lower bound of a bit over an eighth of a second.[7] In practice, of course, switching and routing and computation add overhead. Nor would it be wise to assume that the Internet will forever remain limited to Earth's surface; indeed, satellite transmission has been handling a significant percentage of international traffic since the 1970s.
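The lightspeed bound is easy to check with back-of-the-envelope arithmetic. The Python sketch below assumes a signal travelling at the vacuum speed of light along the Earth's surface between antipodal points, and, for the satellite case, a relay in geostationary orbit directly overhead; the constants are approximate and the function name is invented for the example.

```python
EARTH_CIRCUMFERENCE_KM = 40_075.0      # equatorial circumference, approximate
SPEED_OF_LIGHT_KM_S = 299_792.458      # in vacuum
GEOSTATIONARY_ALTITUDE_KM = 35_786.0   # above the equator, approximate


def min_round_trip_seconds(one_way_km, speed_km_s=SPEED_OF_LIGHT_KM_S):
    """Lower bound on round-trip time over a path of the given one-way length."""
    return 2.0 * one_way_km / speed_km_s


# Antipodal points are half a circumference apart along the surface.
antipodal_rtt = min_round_trip_seconds(EARTH_CIRCUMFERENCE_KM / 2.0)

# Via a geostationary relay, the one-way path is up and down once, so the
# round trip covers four legs of roughly the orbital altitude.
satellite_rtt = min_round_trip_seconds(2.0 * GEOSTATIONARY_ALTITUDE_KM)

print(f"surface, antipodal: {antipodal_rtt:.3f} s")
print(f"geostationary relay: {satellite_rtt:.3f} s")
```

Under these assumptions the surface figure comes out near 0.13 seconds and the satellite figure near half a second. Signals in optical fiber travel at roughly two-thirds of the vacuum speed, so real cable paths do noticeably worse than the first number even before any switching overhead.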

The command-line style has also persisted, for reasons we discussed in depth in [TAOUP]. It will be sufficient to note here that the reasons for the survival of this style are not just technical constraints but the fact that there are large classes of problems for which textual, command-line interfaces are still better-suited than GUIs. One of the distinguishing traits of Unix programmers is that they have retained sophisticated styles of command-line design and already understand these reasons better than anyone outside the Unix tradition, so we will pass over pro-CLI arguments lightly in this book.

There is a subtler lesson to be drawn from these survivals. In software usability design, as in other kinds of engineering, it is seldom wise to dismiss an apparently clumsy or stupid design by assuming that the engineers of bygone days were idiots. Though engineers, being human, undeniably are idiots on occasion, it is far more likely in the normal course of events that a design you find ridiculous after the fact is actually an intelligent response to tradeoffs you have failed to understand.

There were sporadic experiments with what we would now call a graphical user interface as far back as 1962 and the pioneering SPACEWAR game on the PDP-1. The display on that machine was not just a character terminal, but a modified oscilloscope that could be made to support vector graphics. The SPACEWAR interface, though mainly using toggle switches, also featured the first crude trackballs, custom-built by the players themselves.[8] Ten years later, in the early 1970s, these experiments spawned the video-game industry, which actually began with an attempt to produce an arcade version of SPACEWAR.

The PDP-1 console display had been descended from the radar display tubes of World War II, twenty years earlier, reflecting the fact that some key pioneers of minicomputing at MIT's Lincoln Labs were former radar technicians. Across the continent in that same year of 1962, another former radar technician was beginning to blaze a different trail at Stanford Research Institute. His name was Doug Engelbart. He had been inspired by both his personal experiences with these very early graphical displays and by Vannevar Bush's seminal essay As We May Think [Bush], which had presented in 1945 a vision of what we would today call hypertext.

In December 1968, Engelbart and his team from SRI gave a 90-minute public demonstration of the first hypertext system, NLS/Augment.[9] The demonstration included the debut of the three-button mouse (Engelbart's invention), graphical displays with a multiple-window interface, hyperlinks, and on-screen video conferencing. This demo was a sensation with consequences that would reverberate through computer science for a quarter century, up to and including the invention of the World Wide Web in 1991.

So, as early as the 1960s it was already well understood that graphical presentation could make for a compelling user experience. Pointing devices equivalent to the mouse had already been invented, and many mainframes of the later 1960s had display capabilities comparable to those of the PDP-1. One of your authors retains vivid memories of playing another very early video game in 1968, on the console of a Univac 1108 mainframe that would cost nearly forty-five million dollars if you could buy it today in 2004. But at $45M a throw, there were very few actual customers for interactive graphics. The custom hardware of the NLS/Augment system, while less expensive, was still prohibitive for general use. Even the PDP-1, costing a hundred thousand dollars, was too expensive a machine on which to found a tradition of graphical programming.

Video games became mass-market devices earlier than computers because they ran hardwired programs on extremely cheap and simple processors. But on general-purpose computers, oscilloscope displays became an evolutionary dead end. The concept of using graphical, visual interfaces for normal interaction with a computer had to wait a few years and was actually ushered in by advanced graphics-capable versions of the serial-line character VDT in the late 1970s.

If full vector graphics and the custom hardware needed for systems like NLS/Augment were too expensive for general use, character VDTs were too crude. Today's nethack(6) game, run on a color terminal emulator or console, is well representative of the best that advanced VDTs of the late 1970s could do. They hinted at what was possible in visual-interface design, but proved inadequate themselves.

There were several reasons character VDTs came up short that bear on issues still relevant to today's UI designers. One problem was the absence of an input device that was well matched to the graphics display capability; several early attempts, such as light pens, graphic tablets, and even joysticks, proved unsatisfactory. Another was that it proved difficult to push enough bits per second over the line to do serious graphics. Even after VDTs acquired the capability to write pixels as well as formed characters, running GUIs on them remained impractical because serial lines had a peak throughput far too low to support frequent screen repainting.
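A rough calculation shows how far short a serial line falls. The figures in this sketch are illustrative assumptions rather than numbers from the text: a 9600 bit/s line carrying 10 bits on the wire per byte, an 80x24 character screen, and a 606x808 monochrome bitmap with one bit per pixel.

```python
# Rough repaint times over a serial line.
# Assumptions (illustrative only): 9600 bit/s line, 10 wire bits per byte
# (start bit + 8 data bits + stop bit), no compression or escape overhead.
LINE_BITS_PER_S = 9600
WIRE_BITS_PER_BYTE = 10


def repaint_seconds(payload_bytes: int) -> float:
    """Time to push payload_bytes down the line at full speed."""
    bytes_per_s = LINE_BITS_PER_S / WIRE_BITS_PER_BYTE
    return payload_bytes / bytes_per_s


# One byte per character cell on an 80x24 text screen.
char_screen_bytes = 80 * 24

# One bit per pixel on a hypothetical 606x808 bitmap, rounded up to bytes.
bitmap_bytes = (606 * 808 + 7) // 8

print(f"character screen: {repaint_seconds(char_screen_bytes):.1f} s")
print(f"full bitmap:      {repaint_seconds(bitmap_bytes):.1f} s")
```

With these numbers a full character screen takes about two seconds to repaint, while a single full-screen bitmap takes over a minute, which is hopeless for anything that changes the display frequently.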

For reasonable update speed, graphics displays really need to be coupled more closely to the machine that is doing the rendering than a serial connection will allow. This is especially true if one needs to support the kind of high interactivity and frequently changing displays characteristic of games or GUIs; for this, only direct memory access will do. Thus, the invention of the GUI had to wait until developments in silicon integrated circuits dropped the cost of computing power enough that a capable processor could be associated with each display, and the combination had become sufficiently inexpensive that machines could be dedicated to individuals' use.

The other missing piece was Engelbart's invention of the mouse — and, just as significantly, the visible mouse pointer. This more controllable inversion of the large, crude early trackballs meant that users could have a repertoire of two-dimensional input gestures to match the two-dimensional screen. It made interfaces based on direct visual manipulation of objects on-screen feasible for the first time.

Figure 2.4. The Xerox Alto.

The input device on the left appears to be a touch tablet, a mouse alternative similar to the trackpads on modern portables.

NLS/Augment had shown what was possible, but the engineering tradition behind today's GUIs was born at the Xerox Palo Alto Research Center (PARC) around the same time character-cell VDTs were becoming generally available in the rest of the world. Inspired by Engelbart's 1968 demo, in 1973 the PARC researchers built a pioneering machine called the Alto that featured a bit-mapped display and a mouse, and was designed to be dedicated to the use of one person. It wasn't called either a “workstation” or a “personal computer”, but it was to become a direct ancestor of both. (At around the same time PARC gave birth to two other technologies that would grow in importance along with the GUI: the Ethernet and the laser printer.)

From today's post-Alto point of view, screen shots of the Alto UI show a curious mix of modernity and crudity. What's present are all the logical components of GUIs as we know them — icons, windows, scrollbars, sliders, and the like. The main GUI element missing, in fact, is the pull-down menu (introduced by the Apple Lisa in 1979). What's missing, most conspicuously, is color. Also, the pseudo-3D sculptural effects of modern GUI buttons and other impedimenta are missing; the widgets are flat outline boxes, and the whole resembles nothing so much as an etch-a-sketch drawing of a modern interface. It's a little sobering to reflect that most of what we have learned to add to GUIs since 1973 is eye candy.

It was not until the early 1980s that the implications of the PARC work would escape the laboratory and start to really transform human-computer interaction. There is no shortage of good accounts of that transformation, but most of them tend to focus on personal computers and the history of Apple, giving scant notice to the way the change interacted with and affected the Unix tradition. After 1990, however, and especially after 2000, the stories of Unix, the PC, and the GUI began to re-converge in ways that would have deeply surprised most of their early partisans. Today, a Unix-centered take on the history of user interfaces, even GUIs, turns out to be a much less parochial view than one might have supposed ten or even five years ago.

The entire story is a marvelous lesson for user-interface designers in how design innovation doesn't happen in a vacuum. UI design is no more separable than other forms of engineering and art from the accidents of history and the constraints of economics; understanding the complex and erratic way that we got where we are may be a real help to readers who want to think about their design problems not simply as exercises but as responses to human needs.

One skein of the story begins with the internal developments at Xerox PARC. The Alto begat the Dolphin, Dorado, Dandelion, Dragon, and Dandetiger (an upgrade of the Dandelion). These “D-machines” were a series of increasingly powerful computers designed to exchange information over a prototype Ethernet. They had bitmapped displays and three-button mice. They featured a GUI built around overlapping windows, first implemented on the Alto as a workaround for the small size of its display. They had early connections to the ARPANET, the predecessor of the Internet.

These machines were tremendously influential. Word of them spread through the computer-science community, challenging other groups of designers to achieve similarly dramatic capabilities. Famously, in 1979 Steve Jobs was inspired to start the line of development that led to the Apple Macintosh after visiting PARC and seeing the Alto and D-machines in action there. Less often told is that Jobs had been pre-primed for his epiphany by Apple employee Jef Raskin, whose 1967 thesis had inspired some of the PARC research. Raskin had a keen interest in advanced UI design and wanted to find some of the PARC concepts a home at Apple so he could continue pursuing them.

One of the effects of the Alto was to popularize bit-mapped rather than vector-graphics displays. Most earlier graphics hardware had been designed to explicitly draw points, lines, arcs, and formed characters on a display, an approach which was relatively slow but economical because it required a minimum of expensive memory. The Alto approach was to hang the expense and drive each screen pixel from a unique location in a memory map. In this model almost all graphics operations could be implemented by copying blocks of data between locations in memory, a technique named BitBlt by its inventors at PARC. BitBlt simplified graphics programming enormously and played a vital role in making the Alto GUI feasible.
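The idea behind BitBlt can be sketched in a few lines. This is a deliberately simplified illustration, not the PARC implementation: it stores one pixel per list element instead of packing bits into machine words, and the function name, argument order, and `op` parameter are hypothetical choices made for this example.

```python
def bitblt(src, sx, sy, dst, dx, dy, width, height, op=lambda s, d: s):
    """Combine a width x height block of src into dst at (dx, dy).

    op(source_pixel, dest_pixel) decides the result; the default
    simply overwrites the destination with the source.
    """
    # Snapshot the source block first so that overlapping transfers
    # within a single bitmap behave predictably.
    block = [row[sx:sx + width] for row in src[sy:sy + height]]
    for j, row in enumerate(block):
        for i, s in enumerate(row):
            dst[dy + j][dx + i] = op(s, dst[dy + j][dx + i])


# A tiny 6x4 "screen" and a 2x2 glyph, one pixel per element.
screen = [[0] * 6 for _ in range(4)]
glyph = [[1, 0],
         [1, 1]]

bitblt(glyph, 0, 0, screen, 1, 1, 2, 2)    # draw the glyph
bitblt(screen, 1, 1, screen, 3, 1, 2, 2)   # copy it elsewhere on screen
bitblt(glyph, 0, 0, screen, 1, 1, 2, 2,    # XOR the original away
       op=lambda s, d: s ^ d)

for row in screen:
    print("".join("#" if p else "." for p in row))
```

Drawing, moving, and erasing all reduce to the same block-transfer call with a different combining operation, which is why a single primitive could carry most of a GUI's graphics work.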

The very first commercialization of this technology seems to have been a machine called the Perq,[10] aimed primarily at scientific laboratories. The Perq's dates are difficult to pin down, but an early sales brochure [11] seems to establish that these machines were already being sold in August 1979; other sources claim that due to production delays they first shipped in November 1980. The Perq design featured the same style of portrait-mode high-resolution display as the Alto. It was quite a powerful machine for its time, using a microcoded bit-slice processor with a dedicated BitBlt instruction, and some Perqs remained in use as late as 2001. It supported at least five operating systems, including (later in the 1980s) at least three in the Unix family. Curiously, however, the designers seem to have discarded the mouse and retained only the touch tablet.

The inventors of Unix at Bell Labs were not slow to take notice of the Alto and its BitBlt technique. In 1981 they built a machine originally called the “Jerq”[12] and later renamed to “Blit” at management insistence. Like the Alto, the Blit had a mouse, a bit-mapped screen, and a powerful local processor (in this case, a Motorola 68000). Unlike the Alto or Perq, it was designed to act as a smart terminal to a Unix minicomputer rather than as a networked machine communicating directly with its peers.

This difference in architecture reflected a basic difference in aims. While the PARC crew was free to reinvent the world, the Unix developers had history they were not interested in discarding. Plan 9, their successor to the Unix operating system, retained the ability to run most Unix code. The Blit, later commercialized as the AT&T 5620, was an amphibian: it could act as a conventional smart terminal, or it could download software from its host machine that would give it many of the GUI capabilities of an Alto or D-machine. Outside of Bell Labs and the special context of Plan 9 this amphibian was a solution that never found a problem, and the Unix community's first attempt at integrating a PARC-style interface sank into obscurity.

A few years later, however, Blit-like machines, with local-area-network jacks added and the character-terminal features discarded, would rise again as the X terminal.

That same year, in 1981, Xerox finally brought a machine based on the PARC technology to market. It was called the Xerox Star,[13] and it was a failure. Technically, it was woefully underpowered, slow, and overpriced. Hesitant and inept marketing did not help matters. Many of the developers, sensing more willingness from Apple and other emerging PC companies to push the technology, had started to bail out of PARC in 1980; the failure of the Star accelerated the process. Xerox, despite having pioneered the GUI and several of the other key technologies of modern computing, never turned a profit from them.

Figure 2.7. Screen shot from a Star (1981).

The Star interface pioneered the desktop metaphor that would be ubiquitous in later GUIs.

The third key event of 1981 was the first IBM personal computer. This did not advance the state of the art in GUIs — in fact, the original PC-1 had graphics capability available only as an extra-cost option, and no mouse. Its slow 8088 microprocessor could not have supported a PARC-style GUI even if the will and the design skill had been there to produce one. Even then, however, it was obvious that IBM's entry into the market would eventually change everything.